Spark Driver Job

The Spark Driver Job, more commonly called the Spark driver, is the process that coordinates a Spark application within Apache Spark's distributed computing framework. It orchestrates the execution of the application, requests resources from the cluster manager, and schedules work on executors. This guide covers the driver's functionality, its architecture, and the key considerations for optimizing its performance.

Understanding the Spark Driver Job

At its core, the Spark Driver Job acts as the central coordinator for a Spark application. It is responsible for initializing the application, submitting tasks to executors, and managing the overall execution flow. The driver job is launched when a Spark application starts and remains active throughout the application’s lifecycle, overseeing the distributed computation process.

One of the key responsibilities of the Spark Driver Job is task scheduling. It translates the user's code into a directed acyclic graph (DAG) of operations, splits that graph into stages at shuffle boundaries, and breaks each stage into tasks, typically one per data partition. When it places those tasks on the cluster, it considers data locality, resource availability, and the dependencies between stages, so that work starts as early as its inputs allow.
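The dependency-aware ordering described above can be sketched in plain Python. This is a minimal illustration, not Spark's scheduler: the unit names in `stage_dependencies` are hypothetical, and the grouping into "waves" is only an assumption used to show which units of work could run at the same time.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency graph: each unit of work maps to the units it depends on.
stage_dependencies = {
    "read_orders": set(),
    "read_customers": set(),
    "join": {"read_orders", "read_customers"},
    "aggregate": {"join"},
}

def plan_waves(dependencies):
    """Group units into waves; everything in one wave has all its inputs ready."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make the output stable
        waves.append(ready)
        sorter.done(*ready)
    return waves

waves = plan_waves(stage_dependencies)
for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {wave}")
```

The two reads have no dependencies, so they land in the first wave and could run in parallel; the join and the aggregation each wait for the wave before them.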

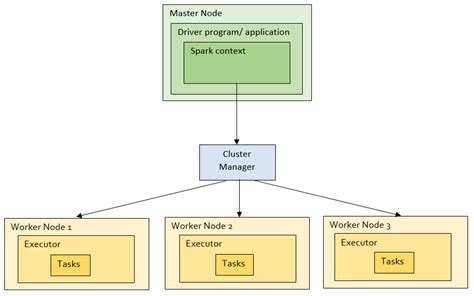

Architecture and Components

The Spark Driver Job sits at the center of an architecture designed to provide a robust and scalable foundation for distributed computing. Some of the components below run inside the driver, while others run elsewhere in the cluster and communicate with it:

- Driver Process: The heart of the Spark Driver Job, this process is responsible for managing the overall execution flow. It communicates with the cluster manager, submits tasks to executors, and handles the coordination of the application.

- Cluster Manager: The cluster manager is responsible for allocating resources within the cluster. It interacts with the driver process to provide the necessary resources for task execution, ensuring efficient utilization of the cluster's capabilities.

- Executors: Executors are the workers in the Spark cluster that perform the actual data processing tasks. They receive tasks from the driver process, execute them, and return the results. Executors are typically distributed across the cluster to maximize parallelism and performance.

- Task Scheduler: The task scheduler is a critical component within the driver process. It is responsible for optimizing task distribution, considering factors such as data partitioning, task dependencies, and resource availability. The task scheduler ensures that tasks are scheduled efficiently, minimizing overhead and maximizing throughput.
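The division of labor between these components can be imitated on a single machine. The sketch below is a toy model under stated assumptions: a thread pool stands in for the executors, and the function names (`split_into_partitions`, `run_task`, `run_application`) are hypothetical, not part of any Spark API.

```python
from concurrent.futures import ThreadPoolExecutor

def split_into_partitions(data, num_partitions):
    """Driver side: slice the input into roughly equal partitions."""
    return [data[i::num_partitions] for i in range(num_partitions)]

def run_task(partition):
    """Executor side: process one partition and return a partial result."""
    return sum(x * x for x in partition)

def run_application(data, num_executors=4):
    """Driver side: create one task per partition, submit them, combine the results."""
    partitions = split_into_partitions(data, num_executors)
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        partial_results = list(pool.map(run_task, partitions))
    return sum(partial_results)

total = run_application(list(range(10)))
print(total)
```

The driver-side functions never touch individual records; they only decide how the data is split and how partial results are combined, which mirrors the coordinating role described in the list above.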

Performance Optimization Techniques

Optimizing the performance of the Spark Driver Job is crucial for achieving efficient and scalable data processing. Here are some key techniques and considerations to enhance the performance of the driver job:

Resource Allocation

Efficient resource allocation is essential for maximizing the performance of the Spark Driver Job. This involves choosing the number of executors, the cores and memory given to each executor, and the memory given to the driver itself, and checking that the cluster can actually provide what is requested. Too few resources leave tasks queued behind one another, while too many leave capacity idle or starve other applications on the same cluster.
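As a rough illustration, the settings involved can be collected in one place and turned into `spark-submit` arguments. The property names below are standard Spark configuration keys, but the values are placeholders chosen for the example, and the helper functions are hypothetical. The slot calculation assumes each task uses one core, which is the default for `spark.task.cpus`.

```python
# Illustrative values only; suitable numbers depend on the cluster and the workload.
resource_conf = {
    "spark.executor.instances": "10",
    "spark.executor.cores": "4",
    "spark.executor.memory": "8g",
    "spark.driver.memory": "4g",
}

def to_submit_args(conf):
    """Render a config mapping as repeated --conf key=value arguments."""
    args = []
    for key, value in sorted(conf.items()):
        args += ["--conf", f"{key}={value}"]
    return args

def total_task_slots(conf):
    """Upper bound on concurrently running tasks: executors times cores per executor."""
    return int(conf["spark.executor.instances"]) * int(conf["spark.executor.cores"])

print(" ".join(["spark-submit"] + to_submit_args(resource_conf)))
print("task slots:", total_task_slots(resource_conf))
```

Comparing the number of task slots with the number of partitions in a stage gives a quick sense of whether the stage can run in one pass or will need several rounds of tasks.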

Data Locality and Partitioning

Data locality plays a significant role in the performance of distributed computing systems like Spark. The Spark Driver Job should leverage data locality to reduce network overhead and improve performance. By partitioning data appropriately and ensuring that tasks are scheduled on nodes where the relevant data resides, the driver job can minimize data movement and maximize efficiency.

| Data Partitioning Strategy | Benefits |
|---|---|
| Hash Partitioning | Balances data across partitions and ensures even distribution. |
| Range Partitioning | Allows for efficient filtering and sorting based on key ranges. |
| Round-Robin Partitioning | Provides a simple and uniform distribution of data. |
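The three strategies in the table differ only in how a record is mapped to a partition index. The functions below are a simplified sketch of each idea, not Spark's partitioner classes: CRC32 is an assumption used here to get a stable hash, and the boundary list passed to `range_partition` is a hypothetical example.

```python
import zlib
from bisect import bisect_left

def hash_partition(key, num_partitions):
    """Hash partitioning: a stable hash of the key, modulo the partition count."""
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions

def range_partition(key, upper_bounds):
    """Range partitioning: partition i holds keys up to and including upper_bounds[i].

    Keys above the last bound go to one extra, final partition.
    """
    return bisect_left(upper_bounds, key)

def round_robin_partition(record_index, num_partitions):
    """Round-robin partitioning: ignore the key and cycle through the partitions."""
    return record_index % num_partitions

bounds = [10, 20]  # hypothetical boundaries, giving three partitions
print([range_partition(k, bounds) for k in (3, 10, 15, 25)])
print([round_robin_partition(i, 3) for i in range(5)])
print(hash_partition("customer-42", 4))
```

Hash and range partitioning both keep equal keys together, which matters for joins and aggregations. Round-robin does not, but it spreads records evenly even when the keys are heavily skewed.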

Task Scheduling Strategies

The task scheduling strategy employed by the Spark Driver Job can significantly impact performance. Dynamic task scheduling, which adapts to changing cluster conditions and resource availability, can lead to better utilization and improved throughput. Additionally, considering task dependencies and prioritizing critical paths can further enhance the overall efficiency of the application.
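One ingredient of such a strategy, preferring nodes that already hold a task's data, can be sketched as a greedy placement routine. This is a deliberately simplified assumption of how locality-aware placement might work: the function and its inputs are hypothetical, and unlike Spark it never waits for a local slot to free up before falling back to another node. The labels `NODE_LOCAL` and `ANY` are borrowed from Spark's locality levels.

```python
def assign_tasks(tasks, free_slots):
    """Greedy placement: prefer a node that holds the task's data, else any free node.

    tasks: list of (task_id, preferred_nodes) pairs.
    free_slots: dict mapping node name to its number of free task slots.
    Returns a dict mapping task_id to (node, locality_level).
    """
    slots = dict(free_slots)  # copy so the caller's view is not modified
    placement = {}
    for task_id, preferred in tasks:
        local = [node for node in preferred if slots.get(node, 0) > 0]
        if local:
            node, level = local[0], "NODE_LOCAL"
        else:
            candidates = [node for node, free in slots.items() if free > 0]
            if not candidates:
                break  # no capacity left; remaining tasks wait for a later round
            node, level = candidates[0], "ANY"
        slots[node] -= 1
        placement[task_id] = (node, level)
    return placement

tasks = [("t1", ["a"]), ("t2", ["a"]), ("t3", ["b"])]
placement = assign_tasks(tasks, {"a": 1, "b": 1})
print(placement)
```

In this run the second task takes the only slot on node `b` as a non-local fallback, which leaves the third task, whose data lives on `b`, without a slot. That trade-off is why a scheduler may prefer to wait briefly for a local slot rather than place every task immediately.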

Dynamic Resource Scaling

In dynamic environments, the ability to scale resources up or down based on workload demands is crucial. The Spark Driver Job should be designed to support dynamic resource scaling, allowing the cluster to adapt to changing data processing needs. This ensures that resources are allocated efficiently, avoiding underutilization or resource contention.
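In Spark this behavior is exposed through the dynamic allocation settings. The property names below are standard Spark configuration keys with placeholder values; the `target_executors` function is a hypothetical simplification of the idea that the executor count should follow the task backlog within configured bounds. Spark's actual policy also ramps requests up gradually and releases executors after an idle timeout, which this sketch leaves out.

```python
import math

# Illustrative values only.
dynamic_allocation_conf = {
    "spark.dynamicAllocation.enabled": "true",
    "spark.dynamicAllocation.minExecutors": "2",
    "spark.dynamicAllocation.maxExecutors": "50",
    # Lets executors be released without an external shuffle service (Spark 3.0+).
    "spark.dynamicAllocation.shuffleTracking.enabled": "true",
}

def target_executors(pending_tasks, running_tasks, cores_per_executor,
                     min_executors, max_executors):
    """Executors needed to run all outstanding tasks at once, clamped to the bounds."""
    needed = math.ceil((pending_tasks + running_tasks) / cores_per_executor)
    return max(min_executors, min(max_executors, needed))

print(target_executors(100, 20, 4, 2, 50))   # moderate backlog
print(target_executors(0, 0, 4, 2, 50))      # idle application
print(target_executors(1000, 0, 4, 2, 50))   # backlog larger than the cap allows
```

The lower bound keeps a few executors warm for the next job, and the upper bound stops one application from taking over a shared cluster.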

Real-World Performance Analysis

To see how these techniques fit together, consider an illustrative scenario: a large-scale data processing application running on a Spark cluster with 100 executors. The application processes a massive dataset, performing complex analytics and machine learning tasks.

In this scenario, the Spark Driver Job plays a central role in optimizing the execution flow. By leveraging data locality and employing efficient task scheduling strategies, the driver minimizes data movement and keeps tasks from waiting unnecessarily. Dynamic resource scaling allows the cluster to adapt to varying workload demands, which improves resource utilization and overall performance.

Performance Metrics

Here are some key performance metrics that can be analyzed to evaluate the effectiveness of the Spark Driver Job:

- Task Completion Time: The average time taken to complete a task, providing insights into the efficiency of task execution.

- Resource Utilization: Measuring the utilization of cluster resources, including CPU, memory, and network bandwidth, to ensure optimal resource allocation.

- Throughput: The rate at which tasks are completed, indicating the overall processing capacity of the cluster.

- Data Transfer Overhead: Monitoring the amount of data transferred between nodes to minimize network overhead and improve performance.
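Given per-task timing records, the metrics listed above reduce to a few lines of arithmetic. The sketch below assumes a hypothetical `TaskRecord` structure with start time, end time, and bytes read over the network; these field names are made up for the example and are not Spark's metrics API. Utilization is approximated as busy task time divided by the task-slot time available over the run.

```python
from dataclasses import dataclass

@dataclass
class TaskRecord:
    start_s: float           # task start, in seconds since the job began
    end_s: float             # task end, in seconds since the job began
    shuffle_read_bytes: int  # bytes this task fetched from other nodes

def summarize(tasks, total_slots):
    """Compute average task time, throughput, slot utilization, and data transferred."""
    durations = [t.end_s - t.start_s for t in tasks]
    wall_clock = max(t.end_s for t in tasks) - min(t.start_s for t in tasks)
    return {
        "avg_task_time_s": sum(durations) / len(tasks),
        "throughput_tasks_per_s": len(tasks) / wall_clock,
        "slot_utilization": sum(durations) / (wall_clock * total_slots),
        "shuffle_read_bytes": sum(t.shuffle_read_bytes for t in tasks),
    }

records = [
    TaskRecord(0.0, 2.0, 100),
    TaskRecord(0.0, 4.0, 200),
    TaskRecord(2.0, 4.0, 300),
]
summary = summarize(records, total_slots=2)
print(summary)
```

A utilization well below 1.0 suggests slots sitting idle, for example because of skewed partitions or tasks waiting on dependencies, while a large share of bytes read from other nodes points at shuffle-heavy stages.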

Future Implications and Innovations

As Spark continues to evolve, the Spark Driver Job is likely to see significant advancements and innovations. Here are some potential future developments:

- Intelligent Task Scheduling: The development of machine learning-based task scheduling algorithms could revolutionize the efficiency of the Spark Driver Job. These algorithms could dynamically adapt to changing workloads, optimizing task distribution and minimizing overhead.

- Autoscaling: Spark already supports dynamic allocation of executors, and tighter, workload-aware autoscaling could let the Spark Driver Job adjust resource allocation more precisely based on real-time demand. This would further enhance the efficiency and scalability of Spark applications.

- Hybrid Execution Models: Exploring hybrid execution models that combine the strengths of different distributed computing frameworks could provide new opportunities for optimizing the Spark Driver Job. Spark already runs alongside Hadoop and on cloud-native platforms, so this would mean deepening those integrations rather than starting from scratch.

Frequently Asked Questions

How does the Spark Driver Job handle fault tolerance and recovery?

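The Spark Driver Job relies mainly on task retries and lineage to handle failures. When a task fails or an executor is lost, the driver reschedules the affected tasks on other executors, and lost partitions are recomputed from their lineage or, where checkpointing is enabled, from checkpoint data. This lets the application continue in the face of node failures or other unexpected events. A failure of the driver itself is more serious, because the driver holds the application's scheduling state, and recovering from it typically means restarting the application.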

Can the Spark Driver Job be optimized for specific workloads or use cases?

Absolutely! The Spark Driver Job can be optimized for specific workloads by tuning parameters such as resource allocation, task scheduling strategies, and data partitioning. By understanding the characteristics of the workload, the driver job can be tailored to maximize performance and efficiency for that particular use case.

What are some common challenges faced when optimizing the Spark Driver Job?

Common challenges include determining the optimal resource allocation, managing data locality effectively, and handling task dependencies efficiently. Additionally, ensuring the driver job is resilient to failures and can recover gracefully is crucial for maintaining the reliability of Spark applications.